From Silence to Symphony: Bridging the Gap Between Musical Vision and Reality

Every creative medium has its moment of transformation—when technology shifts from being a specialist’s tool to becoming accessible to anyone with an idea. Photography experienced this with digital cameras and smartphones. Video production went through it with affordable editing software and high-quality phone cameras. Writing saw it with word processors and online publishing platforms. Now, music creation stands at a similar crossroads, with AI-powered generation tools fundamentally altering who can participate in musical expression.

The significance extends beyond mere convenience. For generations, music has been uniquely gatekept among creative mediums. While anyone could pick up a pen and write, or a camera and photograph, creating music required instruments, training, and technical knowledge that placed it beyond casual experimentation. AI song generators represent a fundamental shift in this dynamic, raising questions about creativity, authenticity, and the future relationship between human intention and technological capability.

The Psychological Barrier of Musical “Talent”

The Myth of Innate Musical Ability

Western culture has long perpetuated the notion that musical ability is innate—something people either possess or lack. This belief creates a psychological barrier that prevents many from even attempting music creation. Someone might try painting despite limited skill, viewing it as exploration. But music carries different expectations; attempting to create it without “talent” feels presumptuous.

This psychological barrier often proves more limiting than any technical one. People who could learn basic music production given time and resources never attempt it because they’ve internalized the belief that music isn’t “for them.” The result is countless unexpressed musical ideas, not because creation was technically impossible, but because it felt psychologically inappropriate.

AI music generation disrupts this psychological barrier by reframing music creation as idea articulation rather than technical execution. Someone doesn’t need to believe they have musical talent to describe what they want music to accomplish. This subtle shift—from “making music” to “describing music”—removes the psychological obstacle that prevented exploration.

The Confidence Gap in Creative Expression

Related to the talent myth is a confidence gap that affects how people approach different creative mediums. The same person who confidently writes social media posts, takes photos, or designs presentations might feel completely inadequate attempting to create even simple music.

This confidence gap has practical consequences. A marketing professional developing a campaign might have clear ideas about the sonic identity needed but feel unqualified to articulate those ideas to a composer, much less attempt creation themselves. The result is either abandoned ideas or compromised visions filtered through multiple layers of translation and interpretation.

AI generation addresses this confidence gap by accepting natural language descriptions without judgment. There’s no “wrong” way to describe what music should feel like, no technical terminology required, no sense of being evaluated by an expert. This psychological safety encourages experimentation that traditional production environments often inhibit.

The Economics of Musical Scarcity

How Scarcity Shaped Music Consumption

For most of recorded music history, music creation was scarce and consumption was abundant. Recording, producing, and distributing music required significant resources, creating natural scarcity. This scarcity gave music economic value and shaped entire industries around controlling and monetizing that scarcity.

The digital revolution disrupted distribution scarcity—suddenly anyone could access millions of songs instantly. But creation scarcity remained. Making original music still required skills, equipment, and time that kept it limited to a relatively small group of practitioners.

AI generation potentially eliminates creation scarcity entirely. If anyone can generate custom music on demand, what happens to the economic models built on creation being scarce? This question has profound implications for how music is valued, compensated, and integrated into other creative work.

The Custom Music Economics

Consider the economics of custom music before AI generation. A small business wanting original music for a promotional video had three options: hire a composer for $1,000 to $5,000, license stock music for $50 to $200, or attempt DIY production with uncertain results.

This pricing structure meant custom music was reserved for projects with substantial budgets. The vast majority of small-scale creative work—local business videos, educational content, personal projects—relied on stock music or went without.

AI generation introduces a new economic tier: custom music at near-zero marginal cost. This doesn’t just make existing use cases cheaper; it enables entirely new applications that weren’t economically viable before. A teacher can now have unique music for every lesson. A small nonprofit can have original music for each campaign. A hobbyist can score their entire video series with custom tracks.

This expansion of what’s economically feasible represents genuine democratization—not just making existing options cheaper, but making previously impossible options possible.
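To make the tiers concrete, here is a rough back-of-the-envelope sketch. The composer and stock price ranges are the ones quoted above; the per-track AI cost and the number of lessons are purely hypothetical assumptions chosen for illustration, not figures from any real service.

```python
# Price ranges quoted in the article (USD per track).
COMPOSER_RANGE = (1_000, 5_000)
STOCK_RANGE = (50, 200)

# Hypothetical assumption: a small per-track cost for AI generation.
# This number is illustrative only and does not describe a real product.
ASSUMED_AI_COST_PER_TRACK = 0.50


def total_cost(per_track_range, tracks):
    """Return the (low, high) total cost for a number of tracks."""
    low, high = per_track_range
    return (low * tracks, high * tracks)


def ai_total_cost(tracks, per_track=ASSUMED_AI_COST_PER_TRACK):
    """Return the total cost under the assumed AI per-track price."""
    return per_track * tracks


if __name__ == "__main__":
    lessons = 36  # hypothetical: one unique track per lesson
    print("Composer:", total_cost(COMPOSER_RANGE, lessons))
    print("Stock:   ", total_cost(STOCK_RANGE, lessons))
    print("AI:      ", ai_total_cost(lessons))
```

Under these assumptions, a track for every lesson is out of reach at composer rates and still a real expense with stock licensing, which is why the use case only appears once the per-track cost approaches zero.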

Practical Applications Transforming Creative Workflows

The Iterative Creative Process

Traditional music commissioning follows a linear path: brief the composer, wait for delivery, provide feedback, wait for revisions. This process works but lacks the iterative spontaneity that characterizes other creative work.

Compare this to graphic design, where a designer can try multiple color schemes, layouts, and typography choices in minutes, seeing results immediately and iterating based on what works. Music production has never allowed this kind of rapid iteration for non-specialists.

An AI song maker brings an iterative workflow to music creation. A video editor can generate music, edit it into their project, realize it doesn’t quite match the pacing, generate a variation with an adjusted tempo, test that version, and continue refining, all within the time previously spent waiting for a single composer revision.

This iterative capability changes how music integrates into creative projects. Rather than music being added at the end to fit existing content, it can evolve alongside that content, with each informing the other throughout the creative process.

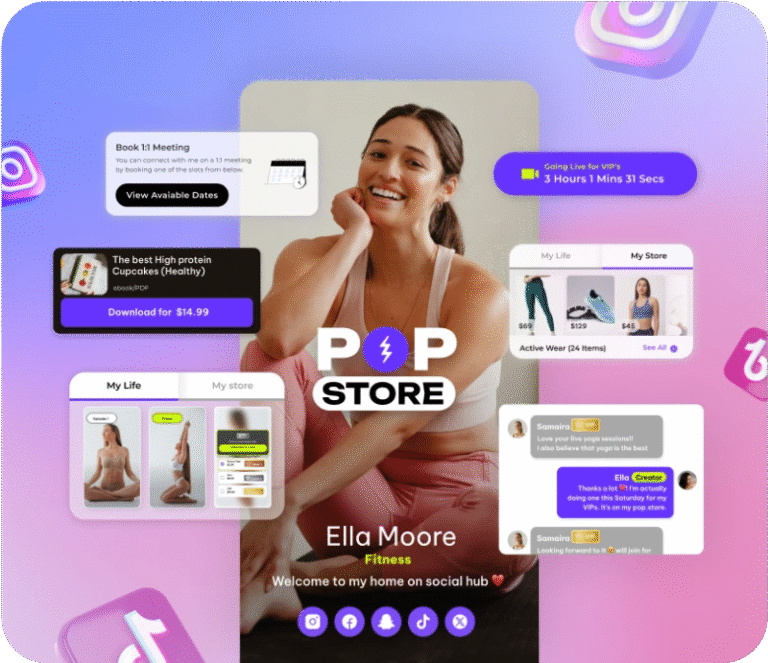

Personalization at Scale

Marketing and content creation increasingly emphasize personalization—tailoring messages to specific audience segments. But music has remained largely one-size-fits-all due to production constraints.

AI generation enables musical personalization previously impossible. A fitness app could generate workout music matching each user’s preferred tempo and energy level. An educational platform could create background music optimized for different learning styles. A meditation app could generate soundscapes tailored to individual preferences and session lengths.

Testing these applications reveals both possibilities and limitations. The technology handles personalization of basic parameters—tempo, energy, instrumentation—quite well. More subtle personalization based on complex preferences or cultural context proves more challenging. But even basic personalization represents a significant advance over static, universal music choices.
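As a minimal sketch of what personalizing those basic parameters could look like, the snippet below turns a listener profile into a plain-language description that could be passed to a generation tool. The `ListenerProfile` class, the `build_prompt` function, the energy thresholds, and the tempo limits are all hypothetical names and values invented for illustration; no specific tool's API is assumed.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ListenerProfile:
    """Hypothetical per-user preferences (illustrative only)."""
    preferred_bpm: int
    energy: float  # 0.0 (calm) to 1.0 (intense)
    instruments: Tuple[str, ...] = ()


def clamp(value, low, high):
    """Keep a value inside the inclusive range [low, high]."""
    return max(low, min(high, value))


def build_prompt(profile, activity):
    """Build a natural-language music description from a profile."""
    bpm = clamp(profile.preferred_bpm, 40, 220)  # assumed sane tempo limits
    energy = clamp(profile.energy, 0.0, 1.0)
    if energy < 0.34:
        mood = "calm"
    elif energy < 0.67:
        mood = "moderate"
    else:
        mood = "high-energy"
    instruments = ", ".join(profile.instruments) or "any instruments"
    return f"{mood} {activity} music at {bpm} BPM featuring {instruments}"


if __name__ == "__main__":
    runner = ListenerProfile(150, 0.9, ("drums", "synth bass"))
    print(build_prompt(runner, "workout"))
```

Tempo, energy, and instrumentation map cleanly onto a description like this. The subtler preferences and cultural context mentioned above do not reduce to a few fields, which is one way to see why they are harder to personalize.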

Collaborative Creativity Across Disciplines

Perhaps the most interesting application involves collaboration between specialists in different creative fields. A choreographer with no music training can generate tracks matching specific movement sequences. A visual artist can create soundscapes complementing their installations. A writer can produce audio atmospheres enhancing their storytelling.

These cross-disciplinary applications were always conceptually possible but practically difficult. Collaboration between specialists in different fields requires extensive communication, translation of ideas across disciplinary languages, and significant coordination overhead.

AI generation reduces this friction by allowing each specialist to work more independently while still creating integrated multi-sensory experiences. The choreographer doesn’t need to explain their vision to a composer—they can generate music directly and iterate until it matches their movement concepts.

The Authenticity Question

What Makes Music “Real”

AI-generated music raises philosophical questions about authenticity. If music is created by algorithms rather than human hands, is it “real” music? Does it have less value or meaning?

These questions echo debates from previous technological disruptions. When synthesizers emerged, purists argued they weren’t “real” instruments. When drum machines appeared, critics claimed they couldn’t replicate human feel. When Auto-Tune became widespread, debates erupted about authentic vocal performance.

Each time, the answer proved more nuanced than binary real/fake distinctions. Synthesizers didn’t replace acoustic instruments but expanded sonic possibilities. Drum machines enabled new genres while acoustic drums remained valued. Auto-Tune became another production tool, used well or poorly depending on context and intention.

AI-generated music will likely follow a similar path: not replacing human composition, but serving different purposes and enabling different applications. The music generated for a small business video doesn’t need the same authenticity as a carefully crafted album; it needs to serve a functional purpose effectively.

The Human Element in Curation

Even when AI generates music, human judgment remains central. Someone decides what to generate, evaluates whether the output serves its purpose, selects among multiple variations, and determines how the music integrates into larger projects.

This curatorial role represents genuine creative contribution. The person directing AI generation brings taste, judgment, and contextual understanding that the AI lacks. They’re not just pressing a button—they’re making creative decisions about what serves their project’s needs.

This suggests that AI generation doesn’t eliminate human creativity from music—it shifts which aspects of creativity matter most. Technical execution becomes automated, while conceptual direction, quality evaluation, and contextual integration remain human responsibilities.

The Path Forward

The integration of AI into music creation is inevitable and already underway. The relevant questions aren’t whether this should happen but how it happens—what norms develop around use, how economic models adapt, what new creative possibilities emerge, and how human musicians evolve their roles.

For individuals and organizations needing functional music for creative projects, AI generation solves real problems and removes genuine barriers. The teacher can finally have custom music for lessons. The small business can develop sonic branding. The content creator can produce original soundtracks. These applications don’t threaten artistic music creation—they serve different needs that were previously unmet.

For professional musicians, the challenge involves distinguishing what AI can replicate from what requires human artistry. Technical proficiency alone may no longer suffice as a differentiator, but artistic vision, emotional depth, cultural authenticity, and the ability to take creative risks remain firmly human territory.

The future of music creation likely involves both AI and human contributions, each serving different purposes and sometimes working in combination. The melody that once existed only in imagination can now find expression, and that expansion of creative possibility—whatever complications it introduces—represents a fundamental shift in who gets to participate in the ancient human practice of making music.

Disclaimer

This article is intended for informational and educational purposes only. It reflects general observations and opinions about AI-powered music generation and its impact on creativity, technology, and the creative economy. It does not constitute legal, financial, or professional advice of any kind.

Any references to AI song generators, music-making tools, or related technologies are used for illustrative purposes only and do not imply endorsement, affiliation, or sponsorship with any specific company, platform, or product. Readers should conduct their own research and review applicable terms of service, copyright laws, and licensing agreements before using any AI-generated music for commercial or public purposes.